Intelligent Article System in Practice: Massive Data Statistics with Hadoop (14)

Posted by admin on 2018-7-10 17:15

1. Add Hadoop to the environment variables

#vi /etc/profile

export HADOOP_HOME=/usr/local/soft/Hadoop/hadoop

export PATH=${HADOOP_HOME}/bin:${HADOOP_HOME}/sbin:$PATH

export CLASSPATH=$($HADOOP_HOME/bin/hadoop classpath):$CLASSPATH

#source /etc/profile
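To confirm that the new settings took effect in the current shell, a small check along the following lines may help. This is only a sketch: the helper name `path_has_dir` is hypothetical and not part of Hadoop, and the fallback directory is the `HADOOP_HOME` value used above.

```shell
# Hypothetical helper: succeeds if directory $1 is one of the entries in $PATH.
path_has_dir() {
    case ":${PATH}:" in
        *":$1:"*) return 0 ;;
        *)        return 1 ;;
    esac
}

# Report whether the Hadoop bin directory (derived from HADOOP_HOME) is on PATH.
if path_has_dir "${HADOOP_HOME:-/usr/local/soft/Hadoop/hadoop}/bin"; then
    echo "hadoop bin directory is on PATH"
else
    echo "hadoop bin directory is NOT on PATH; try: source /etc/profile"
fi
```

Wrapping `$PATH` in colons lets the pattern match the first and last entries as well as the ones in the middle.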

2. Start Hadoop

#hdfs namenode -format

#start-all.sh

#hadoop fs -mkdir -p HDFS_INPUT_PV_IP

3. View the sample log

#cat /var/log/nginx/news.demo.com.access.log-20180701

192.168.100.1 - - [01/Jul/2018:15:59:48 +0800] "GET http://news.demo.com/h5.php HTTP/1.1" 200 3124 "-" "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/63.0.3239.132 Safari/537.36" "-"

192.168.100.1 - - [01/Jul/2018:16:00:03 +0800] "GET http://news.demo.com/h5.php?action=show&id=128 HTTP/1.1" 200 1443 "http://news.demo.com/h5.php" "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/63.0.3239.132 Safari/537.36" "-"

192.168.100.1 - - [01/Jul/2018:16:00:22 +0800] "GET http://news.demo.com/h5.php HTTP/1.1" 200 3124 "-" "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/63.0.3239.132 Safari/537.36" "-"

192.168.100.1 - - [01/Jul/2018:16:00:33 +0800] "GET http://news.demo.com/h5.php?action=show&id=89 HTTP/1.1" 200 6235 "http://news.demo.com/h5.php" "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/63.0.3239.132 Safari/537.36" "-"
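Splitting a log line on whitespace is enough to pick out the client IP, the timestamp and the request URL. The sketch below uses awk purely for illustration (an assumption; it is not the tool the article uses for processing), with the hypothetical function name `show_fields`. awk fields are 1-based, so `$1`, `$4` and `$7` are the first, fourth and seventh whitespace-separated tokens. The sample line is shortened from the log above.

```shell
# Hypothetical helper: print client IP, raw timestamp and request URL
# from access-log lines read on stdin, separated by tabs.
show_fields() {
    awk '{
        ts = substr($4, 2)   # drop the leading bracket of the timestamp token
        printf "%s\t%s\t%s\n", $1, ts, $7
    }'
}

# Sample line (user agent shortened for readability).
printf '%s\n' '192.168.100.1 - - [01/Jul/2018:16:00:03 +0800] "GET http://news.demo.com/h5.php?action=show&id=128 HTTP/1.1" 200 1443 "http://news.demo.com/h5.php" "Mozilla/5.0" "-"' | show_fields
```

Because the user agent string itself contains spaces, only the leading fields have stable positions; anything after the URL is better left alone when splitting this way.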

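4. Collect the log data (a shell script that calls the hadoop command; in real use, configure a scheduled task that processes yesterday's log at 00:01 every day)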

#vi hadoopLog.sh

#!/bin/sh

#Yesterday's date

yesterday=$(date --date='1 days ago' +%Y%m%d)

#Date of the sample log used for testing (overrides the value above)

yesterday="20180701"

#Upload the log file to HDFS with the hadoop command line

hadoop fs -put /var/log/nginx/news.demo.com.access.log-${yesterday} HDFS_INPUT_PV_IP/${yesterday}.log

#sh hadoopLog.sh
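The hard-coded test date can be avoided by letting the script take an optional date argument and fall back to yesterday. The following is a minimal sketch, assuming GNU `date` (the same syntax the script above uses); the function name `log_date` and the script location in the crontab comment are hypothetical.

```shell
# Hypothetical helper: echo the date to process in YYYYMMDD form.
# Uses the first argument when given, otherwise yesterday (GNU date syntax).
log_date() {
    if [ -n "${1:-}" ]; then
        echo "$1"
    else
        date --date='1 days ago' +%Y%m%d
    fi
}

# Example crontab entry (assumed script location) to run daily at 00:01:
#   1 0 * * * /bin/sh /root/hadoopLog.sh
echo "would upload: news.demo.com.access.log-$(log_date "${1:-}")"
```

With this shape, `sh hadoopLog.sh 20180701` would process the sample log, while the scheduled run without arguments would pick up the previous day's file.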

5. Processing the log data with Hadoop: the PHP map script

#vi /usr/local/soft/Hadoop/hadoop/demo/StatMap.php

<?php

error_reporting(0);

while (($line = fgets(STDIN)) !== false)

{

if(stripos($line,'action=show')!==false)

{

$words = preg_split('/(\s+)/', $line);

echo $words[0].chr(9).strtotime(str_replace('/',' ',substr($words[3],1,11))." ".substr($words[3],13)).chr(9).$words[6].PHP_EOL;

}

}

?>

6. Processing the log data with Hadoop: the PHP reduce script

#vi /usr/local/soft/Hadoop/hadoop/demo/StatReduce.php

<?php

error_reporting(0);

$fp=fopen('/tmp/pvlog.txt','w+');

$pvNum=0;

$ipNum=0;

$ipList=array();

while (($line = fgets(STDIN)) !== false)

{

$pvNum=$pvNum+1;

$tempArray=explode(chr(9),$line);

$ip = trim($tempArray[0]);

if(!in_array($ip,$ipList))

{

$ipList[]=$ip;

$ipNum=$ipNum+1;

}

// Write each detail line to a file, for later Hive statistics and HBase detail records

fwrite($fp,$line);

}

fclose($fp);

// Insert the totals into the MySQL database

$yestoday=date("Y-m-d",time()-86400); // In production, report on yesterday's data

$yestoday='2018-07-01'; // For this test, use the log from 2018-07-01

$mysqli = new mysqli('localhost', 'root', '', 'article');

$sql="INSERT INTO stat SET stat_date='{$yestoday}',pv={$pvNum},ip={$ipNum}";

$mysqli->query($sql);

$mysqli->close();

echo "DATE=".$yestoday.PHP_EOL;

echo "PV=".$pvNum.PHP_EOL;

echo "IP=".$ipNum.PHP_EOL;

?>

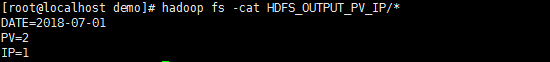
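Hadoop Streaming feeds input lines to the mapper on stdin, sorts the mapper output by key, and passes it to the reducer on stdin, so a job can often be approximated on one machine with an ordinary pipeline before it is submitted to the cluster. The sketch below relies on that idea; `run_local` is a hypothetical helper name, and the stand-in commands in the demonstration are not the article's scripts.

```shell
# Hypothetical helper: emulate "map | shuffle/sort | reduce" on one machine.
# $1 = mapper command, $2 = reducer command; input is read from stdin.
run_local() {
    sh -c "$1" | LC_ALL=C sort | sh -c "$2"
}

# Self-contained demonstration with stand-in commands:
# the "mapper" keeps lines containing action=show, the "reducer" counts them.
printf 'a action=show\nb other\nc action=show\n' | run_local "grep action=show" "wc -l"
```

With PHP installed, the real scripts could be exercised the same way, for example `run_local "php StatMap.php" "php StatReduce.php" < news.demo.com.access.log-20180701`. Note that StatReduce.php still writes `/tmp/pvlog.txt` and inserts a row into MySQL when run like this.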

7. Process the log data with Hadoop (streaming)

#hadoop jar /usr/local/soft/Hadoop/hadoop/share/hadoop/tools/lib/hadoop-streaming-2.9.1.jar -mapper /usr/local/soft/Hadoop/hadoop/demo/StatMap.php -reducer /usr/local/soft/Hadoop/hadoop/demo/StatReduce.php -input HDFS_INPUT_PV_IP/* -output HDFS_OUTPUT_PV_IP

8. View the statistics result

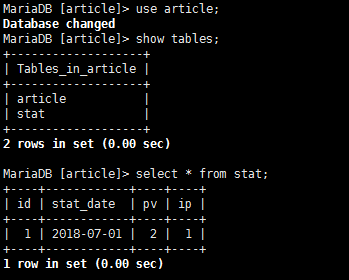

9. View the statistics in the database

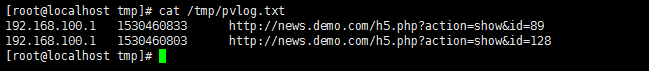

10. View the cleaned data in /tmp/pvlog.txt
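Each line of `/tmp/pvlog.txt` holds the three tab-separated fields emitted by the map script (IP, Unix timestamp, URL). As a cross-check of the PV and IP figures, the file can be summarized with awk. This is a sketch under that assumption; the function name `pv_ip_summary` is hypothetical and the records in the demonstration are made up.

```shell
# Hypothetical helper: count total lines (PV) and distinct first fields (IP)
# in tab-separated records read from stdin.
pv_ip_summary() {
    awk -F '\t' '
        { pv++; if (!($1 in seen)) { seen[$1] = 1; ip++ } }
        END { printf "PV=%d\nIP=%d\n", pv, ip }
    '
}

# Demonstration with three made-up records from two addresses.
printf '10.0.0.1\t1530432003\t/a\n10.0.0.2\t1530432010\t/b\n10.0.0.1\t1530432033\t/c\n' | pv_ip_summary
```

Against the real file this would be run as `pv_ip_summary < /tmp/pvlog.txt`. The associative array makes each distinct-IP lookup a hash probe rather than a scan of a list.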

11. To run the job again, first delete the existing output directory

#hadoop fs -rm -r -f HDFS_OUTPUT_PV_IP

12. Summary of the commands used in this example

#hadoop fs -mkdir -p HDFS_INPUT_PV_IP

#hadoop fs -put /var/log/nginx/news.demo.com.access.log-20180701 HDFS_INPUT_PV_IP

#hadoop jar /usr/local/soft/Hadoop/hadoop/share/hadoop/tools/lib/hadoop-streaming-2.9.1.jar -mapper /usr/local/soft/Hadoop/hadoop/demo/StatMap.php -reducer /usr/local/soft/Hadoop/hadoop/demo/StatReduce.php -input HDFS_INPUT_PV_IP/* -output HDFS_OUTPUT_PV_IP

#hadoop fs -cat HDFS_OUTPUT_PV_IP/*

MariaDB [article]> select * from stat;